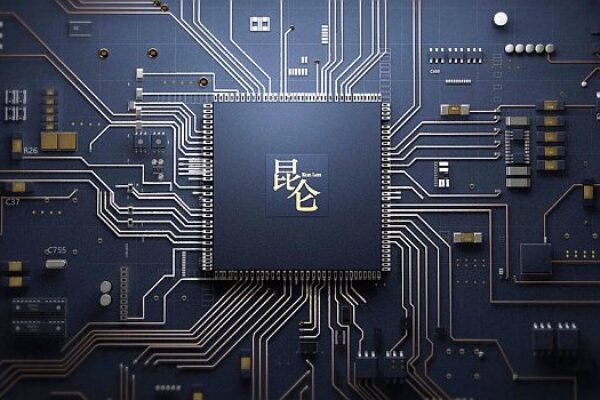

Cloud-to-edge AI chip targets large-scale workloads

The device, called “Kunlun,” is built to meet the high-performance requirements of a wide variety of AI scenarios, the company says. It can be deployed in both cloud and edge settings, such as data centers, public clouds, and autonomous vehicles.

The chip draws on the company’s AI ecosystem, which includes AI scenarios such as search ranking and deep learning frameworks such as PaddlePaddle. Kunlun comprises thousands of small cores and delivers nearly 30 times the computational capability of the FPGA-based AI accelerator the company developed in 2011.

Key specifications include Samsung’s 14-nm process technology, 512 GB/s of memory bandwidth, and 260 TOPS of computing performance at 100 W of power consumption. In addition to supporting common open-source deep learning algorithms, the company says, Kunlun supports a wide variety of AI applications, including voice recognition, search ranking, natural language processing, autonomous driving, and large-scale recommendations.
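These headline figures can be combined into two back-of-the-envelope metrics: peak efficiency (throughput divided by power) and the roofline "ridge point" (throughput divided by memory bandwidth), which is roughly the number of operations a workload must perform per byte fetched before it stops being bandwidth-bound. The sketch below is illustrative only. It assumes decimal units (1 GB = 10^9 bytes), takes the quoted peak numbers at face value, and uses helper names of our own choosing. The article does not say which numeric precision the 260 TOPS refers to, and sustained performance on real workloads will be lower than peak.

```python
# Illustrative arithmetic on the quoted Kunlun figures (peak values, not measurements).
# Assumption: decimal units, i.e. 1 GB = 1e9 bytes and 1 TOPS = 1e12 ops/s.
# The numeric precision behind "TOPS" is not stated in the article.

PEAK_TOPS = 260.0     # tera-operations per second
POWER_W = 100.0       # watts
MEM_BW_GB_S = 512.0   # gigabytes per second


def efficiency_tops_per_watt(tops: float, watts: float) -> float:
    """Peak throughput divided by power draw."""
    return tops / watts


def energy_per_op_pj(tops: float, watts: float) -> float:
    """Average energy per operation at peak throughput, in picojoules."""
    ops_per_second = tops * 1e12
    joules_per_op = watts / ops_per_second
    return joules_per_op * 1e12


def ridge_point_ops_per_byte(tops: float, bw_gb_s: float) -> float:
    """Roofline ridge point: arithmetic intensity needed to be compute-bound."""
    return (tops * 1e12) / (bw_gb_s * 1e9)


if __name__ == "__main__":
    print(f"{efficiency_tops_per_watt(PEAK_TOPS, POWER_W):.2f} TOPS/W")
    print(f"{energy_per_op_pj(PEAK_TOPS, POWER_W):.3f} pJ/op")
    print(f"{ridge_point_ops_per_byte(PEAK_TOPS, MEM_BW_GB_S):.1f} ops/byte")
```

On these assumptions the chip works out to about 2.6 TOPS/W, and a kernel would need roughly 500 operations per byte of memory traffic to reach peak compute; anything with lower arithmetic intensity would be limited by the 512 GB/s memory bandwidth instead.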

The company plans to keep iterating on the chip to help expand an open AI ecosystem. As part of this effort, the company says, it will continue to create “chip power” to meet the needs of fields including intelligent vehicles, intelligent devices, voice recognition, and image recognition.

If you enjoyed this article, you will like the following ones: don't miss them by subscribing to :

eeNews on Google News