Power-efficient AI inference processing comes to the cloud

Qualcomm Technologies is also supporting developers with a full stack of tools and frameworks for each of its cloud-to-edge AI products. Facilitating ecosystem development around this distributed AI model will help enhance a variety of end-user experiences, including personal assistants for natural language processing and translation, advanced image search, and personalized content and recommendations.

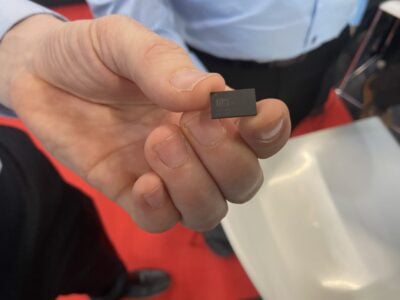

Qualcomm claims the Cloud AI 100 offers more than 10x the performance per watt of the industry's most advanced AI inference systems deployed today. Drawing on the performance and power advantages of a 7nm process node, the highly efficient chip is designed specifically for AI inference workloads and supports industry-leading software stacks, including PyTorch, Glow, TensorFlow, Keras, and ONNX.

“Our Qualcomm Cloud AI 100 accelerator will significantly raise the bar for the AI inference processing relative to any combination of CPUs, GPUs, and/or FPGAs used in today’s data centers,” said Keith Kressin, senior vice president, product management, Qualcomm Technologies, Inc. “Furthermore, Qualcomm Technologies is now well positioned to support complete cloud-to-edge AI solutions all connected with high-speed and low-latency 5G connectivity.”

The Qualcomm Cloud AI 100 is expected to begin sampling to customers in 2H 2019.

If you enjoyed this article, you will like the following ones: don't miss them by subscribing to:

eeNews on Google News